If we know an interval containing the eigenvalues, it is possible to design a scheme with near optimal convergence.

Let’s start with a splitting-style approach:

This implies that

This is the residual iteration form.

Let’s avoid repeating in all the equations and let’s do the substitution:

Then the iteration is:

This substitution can also be interpreted as solving instead of .

Let’s apply our idea of relaxation:

Let’s use the equality , and subtract:

We get:

where is a polynomial of order with .

Note: there is a variant where we use

Then:

In both cases: with

The polynomial needs to be designed to provide maximum error reduction. Estimating requires having an estimate of an interval containing the eigenvalues of

Assume that the eigenvalues of are in the interval .

Then define the following polynomial:

where is a Chebyshev polynomial. Here we also used a shift-and-scale transformation of :

We have . Moreover when :

So and

So as before and for

We can prove the following bound:

We can expect a rapid convergence. But this algorithm requires a good estimation of and .

We can derive a more precise bound for

We have for :

where

Denote by

Then using :

Moreover:

Finally:

with

This expression can be simplified. Assume that the interval containing the eigenvalues is small: and . Then and . We get:

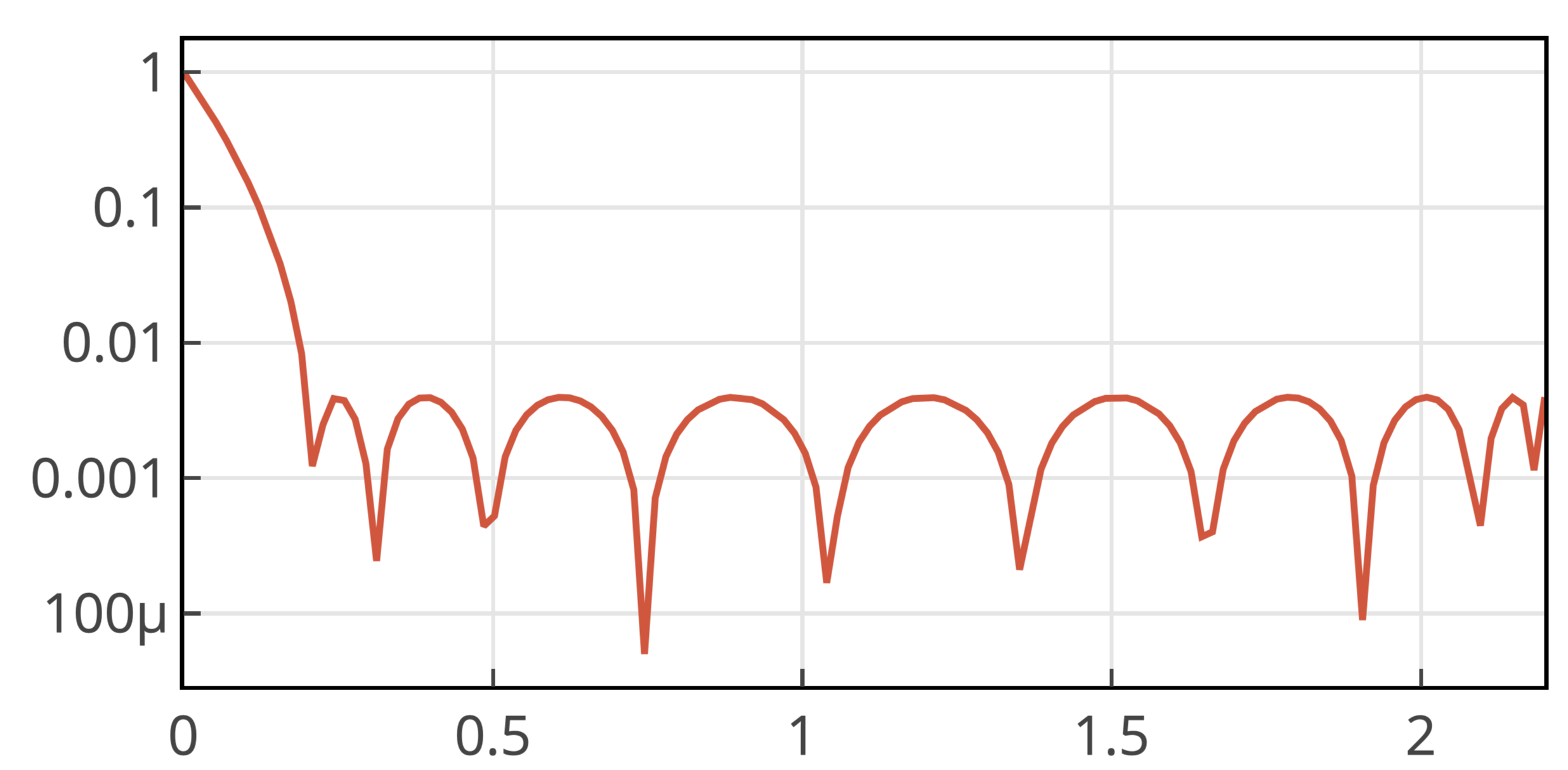

The convergence is very fast. The figure below shows the polynomial with and .