What if is not full column rank?

- The QR factorization exists but is not unique.

- is singular.

- So:

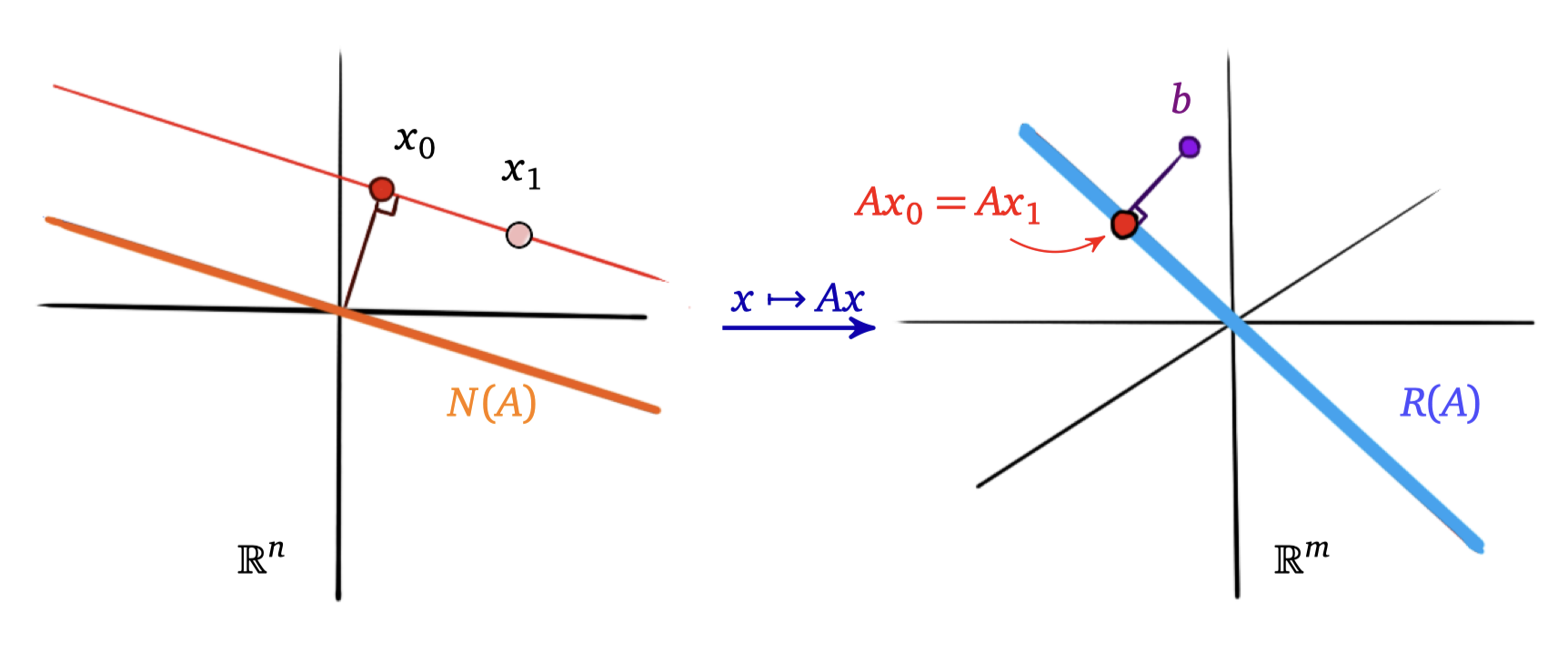

The problem is that the solution of the least-squares problem is non-unique.

- We need to look for the solution with minimum norm.

- This solution is unique.

- It satisfies two conditions:

- : it solves the least-squares problem.

- , that is, the solution has a minimum 2-norm.

Here are the solution steps.

Let’s start with the thin SVD:

Shape of matrices:

- Because is not full column rank, we have that the rank satisfies .

- This is why matrix is thin. and both have columns. has rows and has rows. has size .

Let’s go back to the equation

This is equivalent to:

- But does not uniquely define .

- We need to add the condition that . This guarantees that has minimum 2-norm.

- From :

- Note that because is not full column rank, its null space is non-trivial.

- As a result is a thin matrix and span() is non-trivial as well.

- Let’s now search for the solution :

- The solution is then

since .

- That system has a unique solution in because all the singular values , , are non-zero.

- The final solution is therefore

The computational cost to calculate the SVD is .

Summary

-

Dimensionality reduction: By using the thin SVD, we’re effectively reducing the problem to a smaller subspace where behaves well (invertible scaling via ). This avoids issues that arise from attempting to invert a singular matrix directly.

-

Orthogonal decomposition: The SVD provides an orthogonal basis for both the row and column spaces of . By projecting onto the column space via , we’re capturing the component of that can “reach”.

-

Decoupling the problem: The diagonal nature of means that each component of (and thus ) can be solved independently. This decoupling simplifies the problem from solving a potentially complex system of equations to handling straightforward, individual equations.

-

Avoiding the null space: By ensuring is in the span of (i.e., ), we eliminate any arbitrary components that could exist in the null space of . This is essential for finding the minimum-norm solution.

In practical applications, especially when dealing with data that may be noisy or ill-conditioned, finding a stable and unique solution is critical. The SVD provides a robust framework for:

-

Handling rank deficiency: Even when lacks full rank, the SVD allows us to work within the subspace where is effective.

-

Numerical stability: By avoiding the inversion of (which can be ill-conditioned or singular), we reduce numerical errors and improve the stability of our computations.

-

Interpretability: The SVD not only helps in solving the least-squares problem but also offers insights into the underlying structure of , such as its rank and the significance of its singular values.

Least-squares problems, Singular value decomposition, Method of normal equation, Least-squares solution using QR